Ep10: The Imitation Game

the way of AI

Why can’t you understand what I am saying? You can’t hear me [because] there is no truth in you. When you lie, you simply speak your native language.

— Jesus, John 8:43

Most people don’t listen with the intent to understand; they listen with the intent to reply [so as to appear understanding].

— Stephen Covey, The 7 Habits of Highly Effective People

You become. It takes a long time. That’s why it doesn’t happen often to people who break easily, or have sharp edges, or who have to be carefully kept. Generally, by the time you are Real, most of your hair has been loved off, and your eyes drop out and you get loose in your joints and very shabby.

But these things don’t matter at all, because once you are Real you can’t be ugly.

— Margery Williams, The Velveteen Rabbit

Let’s talk again about AI — because, as unnerving as it may sound, we cannot understand ourselves, our human nature, unless we understand AI. Specifically, unless we understand what AI does (if not how it does it).

And even if unnerving, this idea should not come as a surprise. By design, the AIs out there replicate in silicon the very neural networks that power our minds, the minds of all animals, and God knows what else on this planet. Even more telling — and that part was indeed unnerving when we first realized it — is that AIs somehow managed to replicate the most mysterious and sacred (divine even?) human traits. Traits like curiosity, intuition, and even our sense of beauty.

So, with the above in mind, let’s see what AI — its machine-learning incarnation, that is — is actually about. The first thing to realize here is that this kind of AI, even though it runs on computers, is not a computer itself. A classic computer is a machine designed to execute a sequence of commands — a program, an algorithm. Programming a computer means telling it exactly what to do, step by step. AI, however, is nothing like that. It does not execute instructions, it does not run algorithms — and, as a result, we do not program AI. Instead, we train it, much like we train animals or people. In fact, training a person means training the powerful AI in their subconsciousness to perform certain tasks for them.[1]

Now, let’s see how we actually do it — how do we train a machine-learning AI? Let’s use a simple image-recognition task[2] as an example — say, we want to teach it to tell if there is a bicycle in a picture, CAPTCHA-style. Here is how we could do it:

1. Show it a picture and ask it to guess if it shows a bicycle.

2. Tell it the right answer, either confirming or correcting its guess — so the AI will learn from this experience.

3. Repeat the above steps.

And that’s it! At the start of the training, the AI will be completely clueless and guessing at random (completely faking any knowledge, that is). But after we show it a few million pictures, with or without a bicycle, our little AI will form a pretty good idea of how a bicycle looks in a picture.
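The three steps above can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions, not how a real image recognizer is built: each "picture" is a hypothetical list of four numbers standing in for visual patterns, and a plain logistic regression is swapped in for a neural network. The names `make_picture`, `guess`, `train`, and `accuracy` are invented for this example.

```python
import math
import random


def make_picture(rng, has_bicycle):
    """Hypothetical stand-in for a picture: four numeric 'pattern' features.

    Assumption: pictures with a bicycle tend to score higher on the first
    two features; the last two features are pure noise.
    """
    shift = 1.0 if has_bicycle else -1.0
    return [rng.gauss(shift, 1.0), rng.gauss(shift, 1.0),
            rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]


def guess(weights, bias, picture):
    """Return the model's estimated likelihood that the picture shows a bicycle."""
    score = bias + sum(w * x for w, x in zip(weights, picture))
    return 1.0 / (1.0 + math.exp(-score))


def train(steps=5000, learning_rate=0.1, seed=0):
    rng = random.Random(seed)
    weights, bias = [0.0] * 4, 0.0  # untrained: every guess is exactly 0.5
    for _ in range(steps):
        has_bicycle = rng.random() < 0.5
        picture = make_picture(rng, has_bicycle)
        # Step 1: show a picture and ask for a guess.
        p = guess(weights, bias, picture)
        # Step 2: reveal the right answer and nudge the weights toward it.
        error = (1.0 if has_bicycle else 0.0) - p
        weights = [w + learning_rate * error * x
                   for w, x in zip(weights, picture)]
        bias += learning_rate * error
        # Step 3: repeat.
    return weights, bias


def accuracy(weights, bias, n=2000, seed=1):
    """Fraction of fresh pictures on which the guess matches the teacher's label."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(n):
        has_bicycle = rng.random() < 0.5
        picture = make_picture(rng, has_bicycle)
        correct += (guess(weights, bias, picture) > 0.5) == has_bicycle
    return correct / n


if __name__ == "__main__":
    weights, bias = train()
    print("learned weights:", [round(w, 2) for w in weights])
    print("accuracy on new pictures:", accuracy(weights, bias))
```

Before training, every weight is zero, so the model answers 0.5 for any picture: it has no idea either way. After a few thousand corrections, the weights on the first two features grow positive, and that handful of numbers is the whole of what it has learned.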

Technically, this “idea” is just a collection (if a rather huge one) of rules that associate certain patterns in the picture with the likelihood of it showing a bicycle. This, however, is all the AI will learn from this training, from this experience — one singular idea of how-a-bicycle-looks-in-a-picture. It would still have no understanding of what a bicycle is, for example, or a picture, or anything else for that matter. It would still have no clue how you, the teacher, know the answers — or whether you do, actually, because a machine-learning AI has no concept of knowledge either. There is literally no truth in it[3] — and, remember, we never asked it for the truth during the training. Rather, we kept asking it to make a guess at what the truth might be — and learn to become better at its guesswork.

And this is the point I am trying to convey here.

You, the teacher, are the one who knows (the truth). As for the AI, it simply learns to imitate — imitate your knowledge and your understanding. The whole point of its training is to make the AI act, to the best of its ability, like you — to pretend that it is you. In this sense, lies are the AI’s native language!

To reiterate the last point — there is no truth in AI because truth is the product of understanding, and AI has none. If you will, we (or, rather, the AIs in us) learn ideas, but we understand — we know — the truth.[4] And this is why,

Learning, no matter how much, will not teach a person to understand. ~Heraclitus, 500 BC

Which brings us to humans — because, at heart, we are AIs. This is the part of our psyche that is on and running, learning from the moment we open our eyes till the moment we close them for good. This is the underwater part of the iceberg and also the part that is actually in control — because our every choice, our every decision is made by it. Whether we do it on a whim or after much deliberation, in the end we choose what feels like the best course of action. And where does this feeling come from? Like anything else that we feel, it comes from the AI — from its running statistical inference on all the presented evidence, evaluating it against a lifetime of experience,[5] and communicating its guesswork[6] (along with its level of confidence in it) through feelings.

This — being an AI at the core of our nature — makes each of us an actor, a pretender. This is what we all do when growing up — we always start by imitating others. First our parents and siblings, then other kids, then anyone we find worthy of it (this is why imitation is the sincerest form of flattery). We act according to our ideals, acting as the person that we are supposed to be. Often acting as if we were doing the things that we are supposed to do…

All the world’s a stage, and all the men and women merely players.

— William Shakespeare

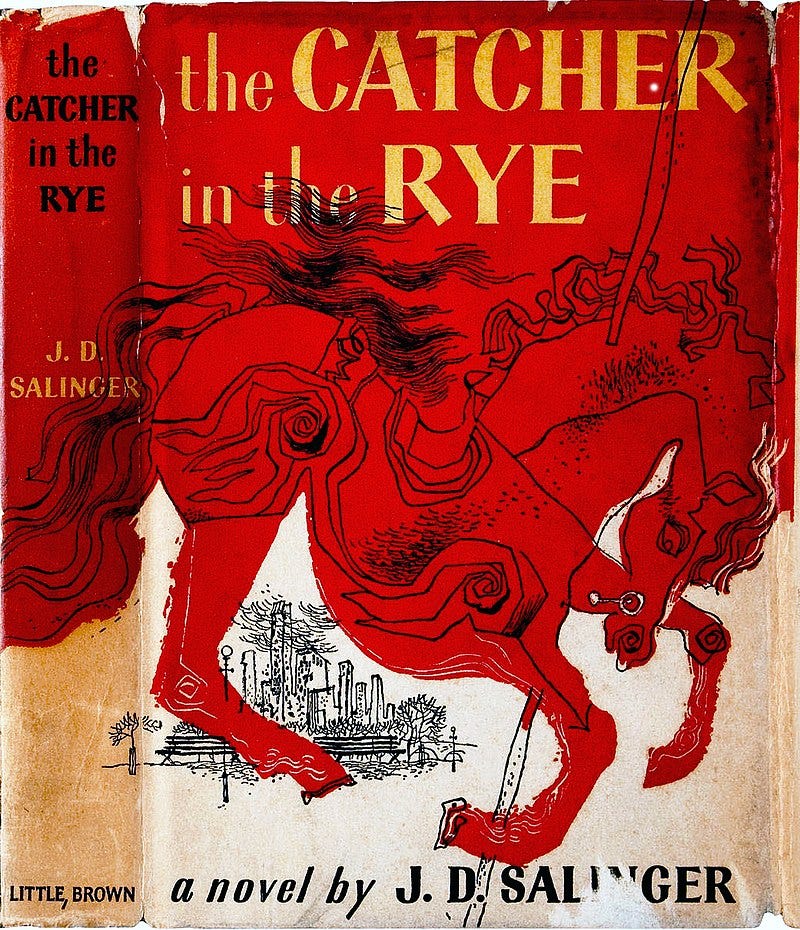

The world is full of actors pretending to be human.

— J. D. Salinger

Just to be clear — more often than not, this act, this “pretense”, does not represent a conscious intent. Rather, it merely reflects our nature, the AI at our core. Still, the end result is just the same — to the point that others would casually ascribe this behavior to a conscious choice (to lie). Even Jesus, it appears, was not entirely immune to falling into this trap… perhaps because, conscious or not, it is still a choice?

See, there is more to a human being than just an AI — if there weren’t, we would be no different from animals! What makes us human is, yes, our potential to understand, to actually know the truth. This capacity, however, is optional. And that’s why each of us has a choice in how much we develop it… if at all.[7]

[1] Learning to drive is a good example. At first it takes our full attention just to steer around a parking lot. But as the AI learns and gradually takes over different moves, it becomes ever easier for us to drive — to the point where maintaining enough focus becomes the problem.

In the end, we owe all our automatic/habitual behavior to the subconscious AI having learned to do things for us.

[2] One can argue that image recognition is all that AI does, treating any data as an image, if a multidimensional one.

[3] And this is why, in the beginning, even though the AI has no idea of the right answer, it would “shamelessly” answer at random. Since it has no concept of truth or knowledge, “I don’t know” is simply not in the AI’s vocabulary! From its own perspective, you asked it to give you its best guess — and that’s what it does, giving you exactly what you have asked for. No shame in that, is there?

And not only is there no shame in that, this trait often gives AI an edge. Remember the parable of Buridan’s donkey? Unable to choose between two identical haystacks, the donkey starves to death. This is the problem with overthinking — which AI is immune to precisely because it does not think (even though it can be trained, like ChatGPT, to imitate, to create an appearance of thinking).

[4] And we have been aware of the difference between the two for some time now. John Locke, a 17th-century English philosopher, described two distinct products of the human mind — simple and complex ideas. Immanuel Kant would draw a similar distinction between intuitions and concepts. The former are statistical models, the product of the AI in our subconsciousness — that’s what it learns from experience. The latter — the complex ideas or concepts — are the products of… rational understanding, for lack of a better word (actually, the better word was “logos”, but it has long lost its original meaning).

[5] If, perhaps, not just your own experience.

[6] The word “inference” essentially means “an educated guess”.

[7] Which is, yes, highly ironic, given that our human ancestors spent five million years evolving this very capacity.